The Brief: Working in teams of 3-4, create a digital twin model(s) using one another as your datasets and various machine learning software examples. Using yourselves as a medium, how can you use machine learning to extend one another’s attributes, mix, mash, and collaborate and formulate new in-between aesthetics from these various processes?

Fanxuan, Noah, and I worked together to create a "pop star" from blended datasets of the three of us performing as Lady Miss Kier in the opening of Deee-Lite's video for "Groove Is in the Heart."

Sketching out the idea in Figma:

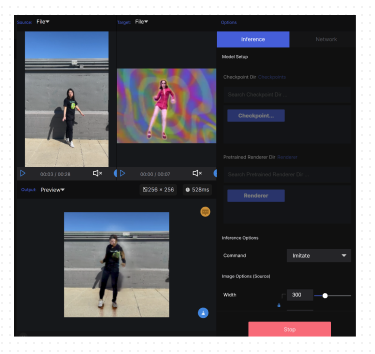

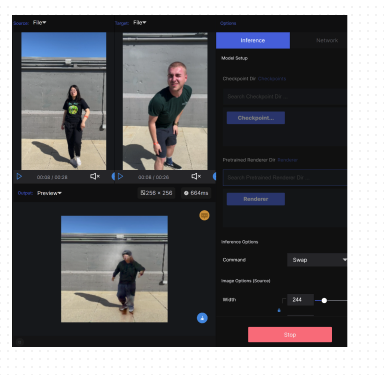

Our Approach: Our team used the Liquid Warping GAN model in RunwayML. This machine learning model takes the movement from one reference and applies it to the person in a second reference.

Data Sets:

Tests:

Final Video:

AI Voice Fail:

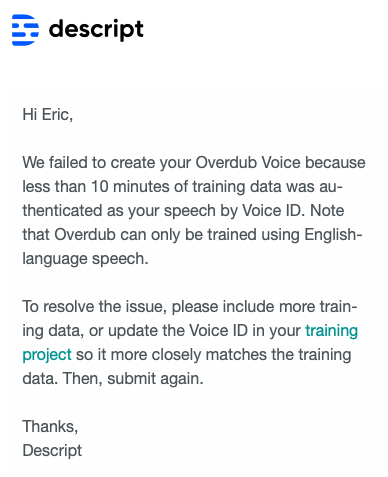

We attempted to use Descript to make an AI voice. Since we were not all together, we created audio tracks that included short clips of each of our voices, but Descript was unable to create a voice from these mixed tracks.
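To make the idea of a track with "little bits of each of our voices" concrete, here is a minimal Python sketch that takes turns pulling fixed-length chunks from each speaker. It is only an illustration: we mixed our actual tracks in Adobe Audition, and the function name `interleave_voices`, the `chunk_len` parameter, and the tiny number lists standing in for audio samples are all hypothetical.

```python
from itertools import zip_longest


def interleave_voices(tracks, chunk_len):
    """Round-robin fixed-length chunks from each speaker into one track."""
    if chunk_len <= 0:
        raise ValueError("chunk_len must be positive")
    # Split each speaker's samples into consecutive chunks.
    chunked = [
        [track[i:i + chunk_len] for i in range(0, len(track), chunk_len)]
        for track in tracks
    ]
    mixed = []
    # Take one chunk per speaker in turn; skip speakers that have run out.
    for round_of_chunks in zip_longest(*chunked):
        for chunk in round_of_chunks:
            if chunk is not None:
                mixed.extend(chunk)
    return mixed


# Hypothetical stand-in data: three "speakers" as short lists of samples.
speaker_a = [1, 1, 1, 1]
speaker_b = [2, 2, 2, 2]
speaker_c = [3, 3]
combined = interleave_voices([speaker_a, speaker_b, speaker_c], chunk_len=2)
print(combined)
```

A track built this way changes speaker every few moments, which is likely a poor fit for a tool that expects one consistent voice to learn from.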

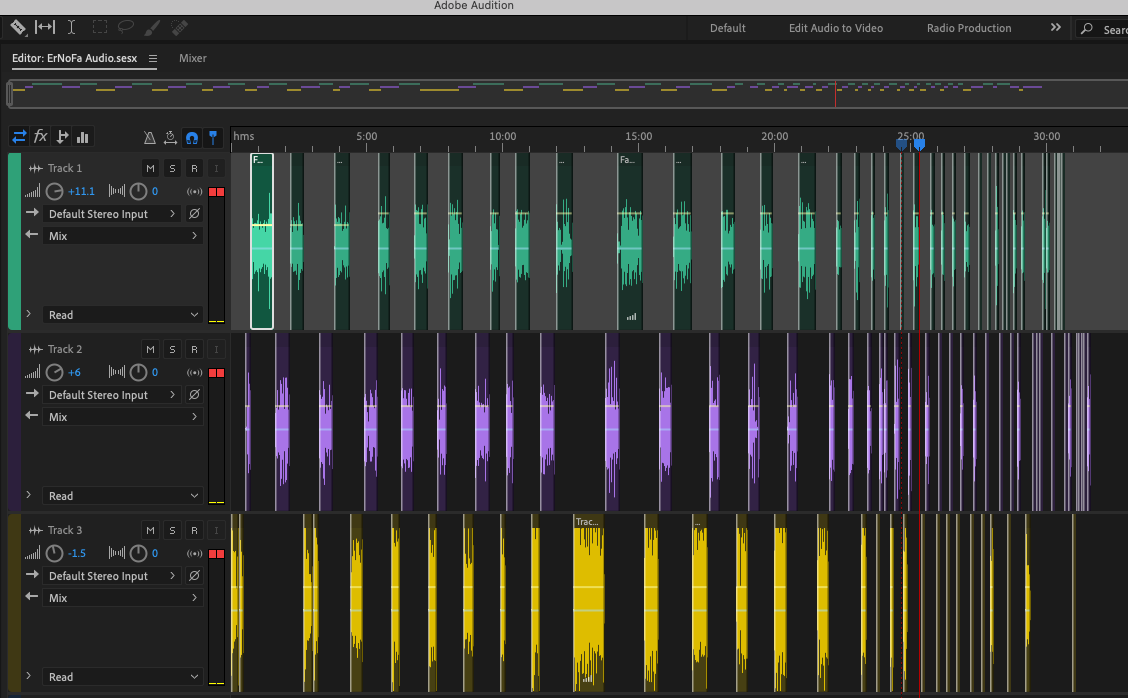

Mixing Tracks in Adobe Audition:

Our Verification Track:

A Nice Message From Descript:

AI Voice Fail Part 2:

We tried to use Resemble AI to create a voice with the three of us in person, but the service was not working.

We also tried to use Descript with the three of us in person, but it also failed.

About Our Datasets: Because our datasets are limited to videos of ourselves, as shown above, we decided they were not of value to the public.